Salesforce Bulk API 1 was released way back in 2009 and Bulk API 2.0 was released in 2018, and both are still officially supported. At a high level they are similar: both provide a way to send large CSV files to Salesforce and load them rapidly. Bulk API 2.0 was intended to make the API easier to use - it brings authentication into line with the other Salesforce APIs, and gets rid of the need to break data into batches before uploading it.

The simplifications that Bulk API 2.0 brings mostly benefit developers who are using the API directly. But most actual Salesforce users won't be hitting the API directly; they'll be using it via an ETL tool like MuleSoft, Talend, Azure Data Factory, or even the old Data Loader.

Bulk API 2.0 also has some disadvantages, in that it is missing some of the features that Bulk API 1 had. So here I am going to make the case that, for most Salesforce users, Bulk API 1 is actually better than Bulk API 2.0:

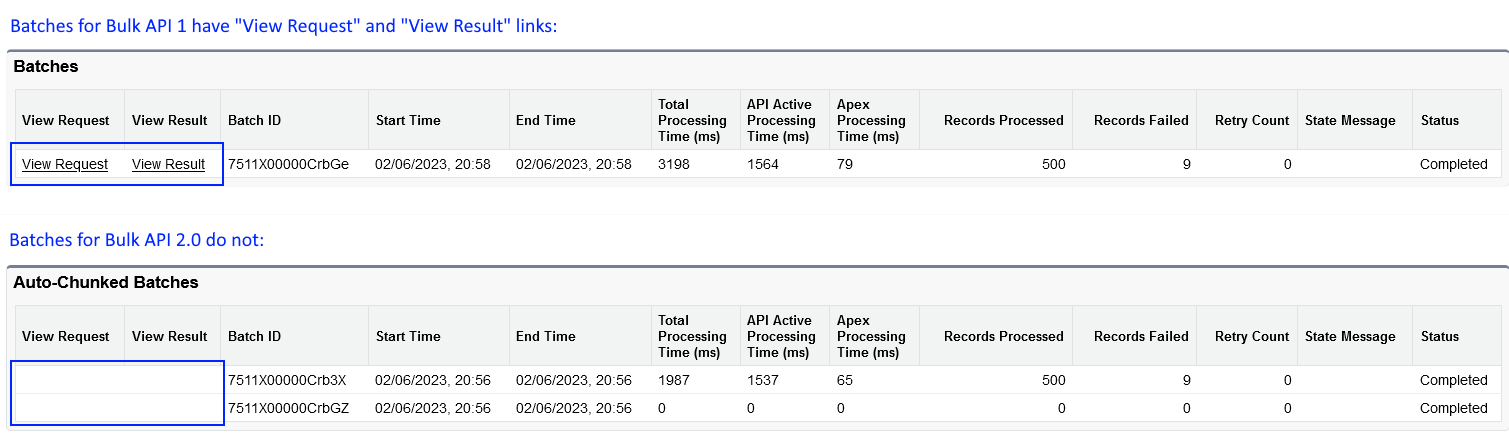

When you do a Bulk Load in Salesforce, it's important to be able to see the results, because if you've got failed rows, the error messages will be in the Bulk Load results files. For Bulk API 1, these are easily accessible on the Bulk Data Load Jobs page. For Bulk API 2.0, you can't get the results via the UI; they are only obtainable via API calls. If you're using an ETL tool, it will usually download the results for you, but it's still very useful to be able to get them directly via the UI sometimes.
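
To give a feel for what "only obtainable via API calls" means in practice, here is a minimal sketch of fetching the failed rows for a Bulk API 2.0 ingest job. It assumes you already have an OAuth access token, your instance URL, and the job ID; the function names are my own, and the sample values are made up. The failed-results CSV puts the error message in an `sf__Error` column ahead of your original fields.

```python
import csv
import io
import urllib.request


def failed_results_url(instance_url, api_version, job_id):
    """Build the Bulk API 2.0 endpoint that returns the failed rows of an ingest job."""
    base = instance_url.rstrip("/")
    return f"{base}/services/data/v{api_version}/jobs/ingest/{job_id}/failedResults/"


def parse_failed_results(csv_text):
    """Turn the failed-results CSV into a list of dicts, one per failed row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def fetch_failed_results(instance_url, api_version, job_id, access_token):
    """Download and parse the failed rows (needs a valid OAuth access token)."""
    request = urllib.request.Request(
        failed_results_url(instance_url, api_version, job_id),
        headers={"Authorization": f"Bearer {access_token}", "Accept": "text/csv"},
    )
    with urllib.request.urlopen(request) as response:
        return parse_failed_results(response.read().decode("utf-8"))
```

Compare that with Bulk API 1, where the same information is a couple of clicks away on the Bulk Data Load Jobs page.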

Bulk API 1 lets you choose between Serial and Parallel mode for loads. On some objects with a lot of lock contention, e.g. CampaignMember, it's pretty vital to be able to load in serial mode, otherwise you tend to just get a locking avalanche. Bulk API 2.0 does not have a serial option. It looks like they trialled one a few years ago, but as of now it is still not generally available. The official advice at the moment is that if you need serial mode, you have to use Bulk API 1.
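
If you are calling Bulk API 1 directly rather than through an ETL tool, serial mode is just one field on the job you create. The sketch below only builds the request (URL, headers and JSON body) and leaves the sending to you; the helper name is hypothetical, and it assumes you already have a session ID and know your instance URL.

```python
import json


def build_serial_job_request(instance_url, api_version, session_id, sobject, operation="insert"):
    """Build the pieces of a Bulk API 1 'create job' request that asks for serial processing."""
    url = f"{instance_url.rstrip('/')}/services/async/{api_version}/job"
    headers = {
        # Bulk API 1 authenticates with a session ID header rather than a Bearer token.
        "X-SFDC-Session": session_id,
        "Content-Type": "application/json; charset=UTF-8",
    }
    body = {
        "operation": operation,
        "object": sobject,
        "contentType": "CSV",
        # "Parallel" is the default; "Serial" processes one batch at a time.
        "concurrencyMode": "Serial",
    }
    return url, headers, json.dumps(body)
```

For example, `build_serial_job_request("https://example.my.salesforce.com", "58.0", session_id, "CampaignMember")` gives you everything needed to POST the job with whatever HTTP client you prefer.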

Bulk API 1 lets you choose the batch size. Salesforce limits how long a batch can take to process: 10 minutes for the whole batch, and 5 minutes for each 200-record chunk within it. If a batch goes over the limit, the remainder is paused and sent to the back of the queue, and if this happens repeatedly (up to 10 times) the batch is eventually failed. If you have an object with lots of automation such as Triggers and Flows, the 10,000 record batches that Bulk API 2.0 uses will probably be too big and you may well start hitting the batch time limits. With Bulk API 1 you can tune your batch sizes to avoid this. With Bulk API 2.0, you can't.
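
Most ETL tools expose batch size as a setting, but under the hood it simply means cutting the CSV into smaller files, each with its own header row, and uploading each one as a separate batch. A rough sketch of that splitting step, using only the standard library (the function name is mine):

```python
import csv
import io


def split_csv_into_batches(csv_text, batch_size):
    """Split one CSV into several CSV strings of at most batch_size data rows each.

    Every batch repeats the header row, since each Bulk API 1 batch is a
    standalone CSV file.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    rows = list(reader)
    batches = []
    for start in range(0, len(rows), batch_size):
        buffer = io.StringIO()
        writer = csv.writer(buffer, lineterminator="\n")
        writer.writerow(header)
        writer.writerows(rows[start:start + batch_size])
        batches.append(buffer.getvalue())
    return batches
```

Each string returned here would then be sent as its own batch on the job. With Bulk API 2.0 there is no equivalent step, because Salesforce does the splitting for you and does not let you pick the size.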

Another, perhaps more important, reason that you might want to set a batch size: Salesforce usually processes Bulk Loads in 200 record chunks. Note that this is independent of batch size. 200 records is a hard limit on the maximum number of records Salesforce can process as one 'transaction'. Within that transaction (chunk of 200 records) various limits apply, such as the maximum allowed number of SOQL queries. If you have a lot of triggers, you may well find that processing a chunk of 200 records hits some of those limits. We can't directly change the chunk size (it's always 200), but we can force a Bulk Load to process smaller sets of data by setting the batch size to a number under 200. For example, if you choose batch size 50, Salesforce will process the records in 'chunks' of 50 because that's what is being fed to it. Sometimes this is a very important technique to avoid hitting SOQL limits. Some people may say "you shouldn't ever need to do this because in a well engineered Salesforce org you should be able to load 200 record chunks without hitting limits". But in reality, this definitely happens sometimes, and in those scenarios being able to set the batch size to a small number like 50 is invaluable. You can do this with Bulk API 1, but you can't do it with Bulk API 2.0.
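
The interaction between batch size and chunk size is easier to see with some arithmetic. This little function is purely illustrative (it is my own model of the behaviour described above, not anything Salesforce provides): it lists how many records end up in each transaction for a given load.

```python
def transaction_sizes(total_records, batch_size, chunk_size=200):
    """Model how a load is cut into batches, and each batch into chunks.

    Returns the number of records in each transaction, in processing order.
    """
    if batch_size < 1 or chunk_size < 1:
        raise ValueError("batch_size and chunk_size must be at least 1")
    sizes = []
    remaining = total_records
    while remaining > 0:
        batch = min(batch_size, remaining)
        remaining -= batch
        # Within a batch, records are processed in chunks of at most chunk_size.
        while batch > 0:
            chunk = min(chunk_size, batch)
            sizes.append(chunk)
            batch -= chunk
    return sizes
```

For a 1,000 record load, `transaction_sizes(1000, 10000)` gives five transactions of 200 records each, which is what you get with Bulk API 2.0. `transaction_sizes(1000, 50)` gives twenty transactions of 50 records each, so every trigger and Flow has a quarter as many records to deal with per transaction.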